Finally, after a month of relentless trial and error, I've successfully created an AI persona bot that emulates the teaching style of my mentor @abnux, the founder of @10kdesigners. Excited to share my journey in this Twitter thread. 🤖🎨 "Sound on 🎙️🔊"

Trusted and used by teams around the globe

Iterate, fast

Developing LLM apps takes countless iterations. With a low-code approach, we enable quick iterations to go from testing to production.

```bash
npm install -g flowise
npx flowise start
```

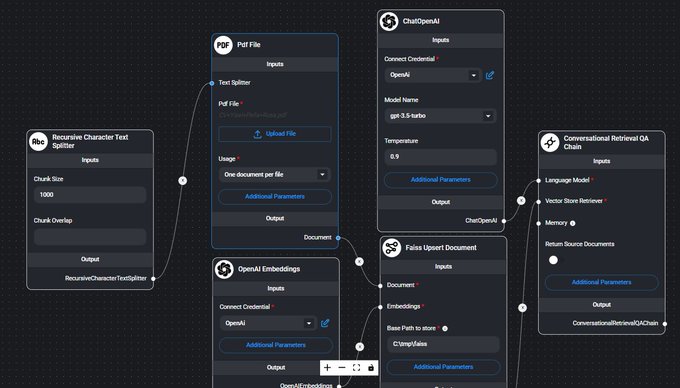

LLM Orchestration

Connect LLMs with memory, data loaders, cache, moderation, and much more.

- LangChain

- LlamaIndex

- 100+ integrations
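The orchestration idea above can be illustrated with a small, framework-free Python sketch of two of those building blocks: a conversation buffer (memory) and an exact-match response cache wrapped around a model call. This is not how Flowise, LangChain, or LlamaIndex implement their nodes; `fake_llm`, `ChatPipeline`, and the `User:`/`Assistant:` prompt format are hypothetical stand-ins.

```python
from typing import Callable, Dict, List, Tuple


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: echoes the prompt's last line."""
    return "echo: " + prompt.strip().splitlines()[-1]


class ChatPipeline:
    """Wraps an LLM function with buffer memory and an exact-match response cache."""

    def __init__(self, llm: Callable[[str], str], max_turns: int = 5) -> None:
        self.llm = llm
        self.max_turns = max_turns
        self.history: List[Tuple[str, str]] = []  # (question, answer) pairs
        self.cache: Dict[str, str] = {}  # full prompt -> model response
        self.llm_calls = 0  # how many times the model was actually invoked

    def build_prompt(self, question: str) -> str:
        # Memory: include up to max_turns recent exchanges as context
        recent = self.history[-self.max_turns:] if self.max_turns > 0 else []
        lines: List[str] = []
        for q, a in recent:
            lines += [f"User: {q}", f"Assistant: {a}"]
        lines.append(f"User: {question}")
        return "\n".join(lines)

    def ask(self, question: str) -> str:
        prompt = self.build_prompt(question)
        # Cache: only call the model for prompts it has not answered before
        if prompt not in self.cache:
            self.cache[prompt] = self.llm(prompt)
            self.llm_calls += 1
        answer = self.cache[prompt]
        self.history.append((question, answer))
        return answer


pipeline = ChatPipeline(fake_llm)
print(pipeline.ask("hello!"))  # → echo: User: hello!
```

Because the cache key is the full prompt, including the remembered turns, a cached answer is reused only when both the question and the conversation context match; keying on the question alone would return stale answers once memory is involved.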

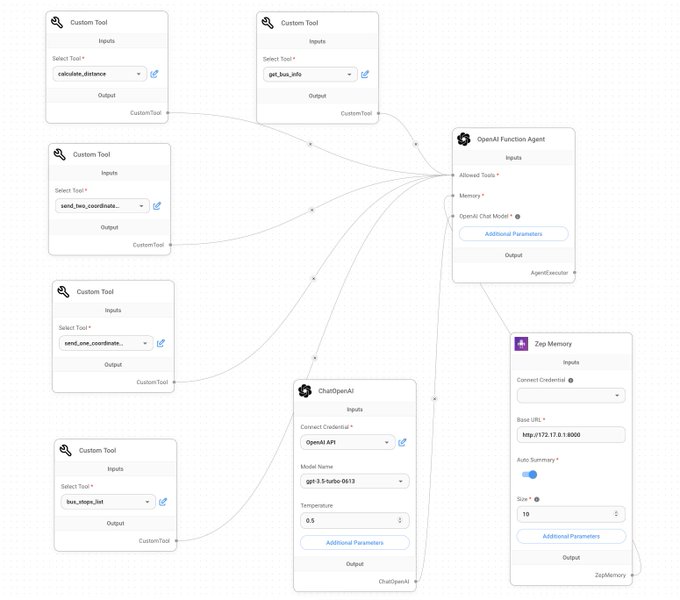

Agents & Assistants

Create autonomous agents that can use tools to execute different tasks.

- Custom Tools

- OpenAI Assistant

- Function Agent

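```python
import requests

# Assumption: a local Flowise server on its default port (3000);
# replace ":id" with the ID of your chatflow.
url = "http://localhost:3000/api/v1/prediction/:id"

def query(payload):
    # POST the payload as JSON and return the decoded JSON response
    response = requests.post(url, json=payload)
    return response.json()

output = query({
    "question": "hello!",
})
```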

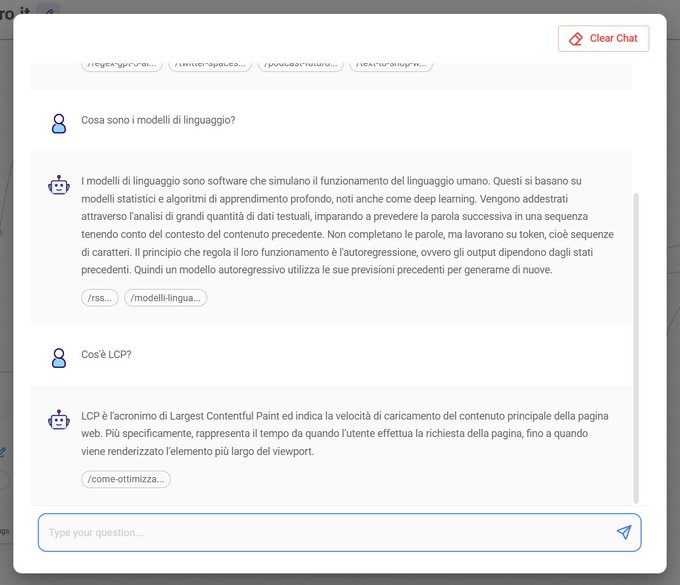

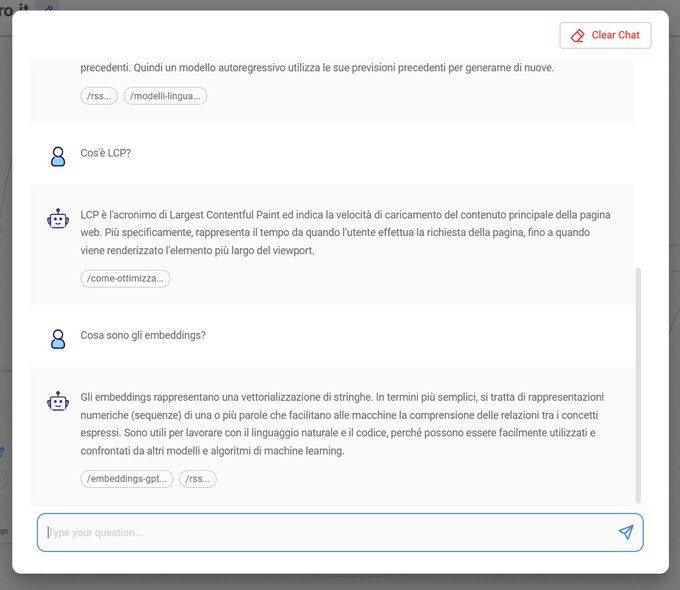

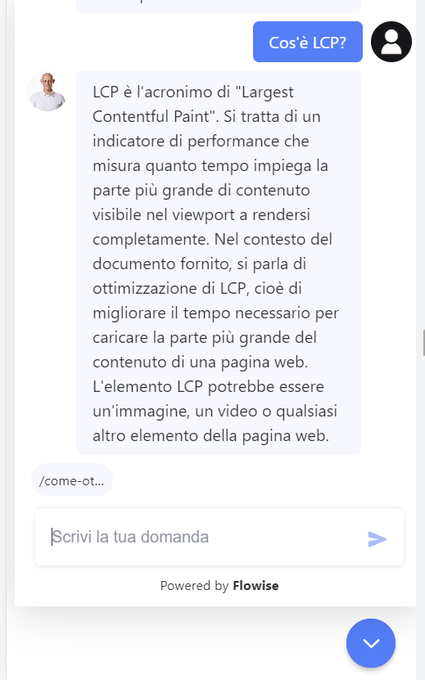
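Conceptually, a tool-using agent runs a loop: the model picks a tool and an input, the tool runs, and its output is fed back until the model decides it is done. The sketch below shows that loop in plain Python under stated assumptions: `decide` stands in for the LLM, and `run_agent`, `word_count`, `shout`, and `scripted_decide` are hypothetical names, not Flowise's Custom Tools, OpenAI Assistant, or Function Agent APIs.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A decision is (tool_name, tool_input), or None when the agent is finished.
Decision = Optional[Tuple[str, str]]


def run_agent(
    task: str,
    decide: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> List[str]:
    """Ask `decide` (stand-in for the LLM) which tool to call, run it, record the result."""
    observations: List[str] = []
    for _ in range(max_steps):
        decision = decide(task, observations)
        if decision is None:  # the "model" signalled that the task is done
            break
        name, tool_input = decision
        tool = tools.get(name)
        if tool is None:
            observations.append(f"error: unknown tool {name!r}")
            continue
        observations.append(tool(tool_input))
    return observations


# Hypothetical tools and a scripted planner, for demonstration only
def word_count(text: str) -> str:
    return str(len(text.split()))


def shout(text: str) -> str:
    return text.upper()


def scripted_decide(task: str, observations: List[str]) -> Decision:
    # A real agent would prompt an LLM here; this script calls two tools, then stops
    plan = [("word_count", task), ("shout", task)]
    return plan[len(observations)] if len(observations) < len(plan) else None


print(run_agent("plan my sprint tasks", scripted_decide,
                {"word_count": word_count, "shout": shout}))
# → ['4', 'PLAN MY SPRINT TASKS']
```

The `max_steps` cap is a common safeguard so that a model that never signals completion cannot loop forever.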

API, SDK, Embed

Extend and integrate into your applications using APIs, an SDK, and embedded chat.

- APIs

- Embedded Widget

- React SDK
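As a dependency-free alternative to the `requests` snippet, the request to the `/api/v1/prediction/:id` endpoint can be built with Python's standard library. This is a sketch under assumptions: the base URL (`http://localhost:3000`, a local default) and the bearer-token `Authorization` header for API-key-protected chatflows should be checked against the Flowise documentation, and `build_prediction_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Optional


def build_prediction_request(
    base_url: str, chatflow_id: str, question: str, api_key: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) a POST request to the prediction endpoint."""
    url = f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumption: the chatflow is protected with a bearer-token API key
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Splitting construction from sending makes the request easy to inspect or test offline; `send(build_prediction_request("http://localhost:3000", "<chatflow-id>", "hello!"))` would only succeed against a running server.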

Open source LLMs

Run in air-gapped environments with local LLMs, embeddings, and vector databases.

- HuggingFace, Ollama, LocalAI, Replicate

- Llama2, Mistral, Vicuna, Orca, Llava

- Self host on AWS, Azure, GCP

Use Cases

One platform, endless possibilities. Explore some of the use cases.

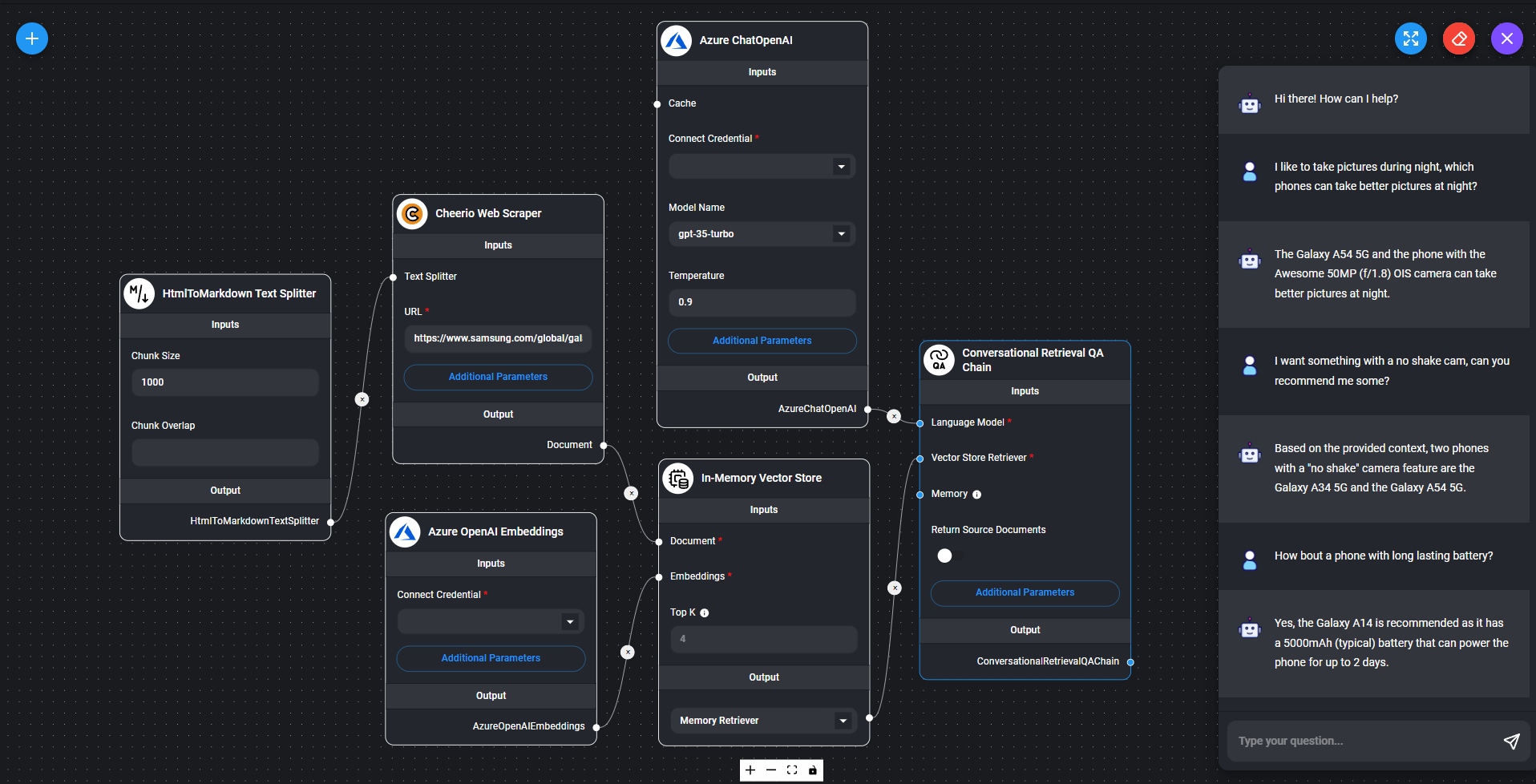

Product catalog chatbot to answer any questions related to the products

Pricing

Free 14-day trial. No credit card required.

Community 🫶

The open-source community is the heart of Flowise. See why developers love and build with Flowise.

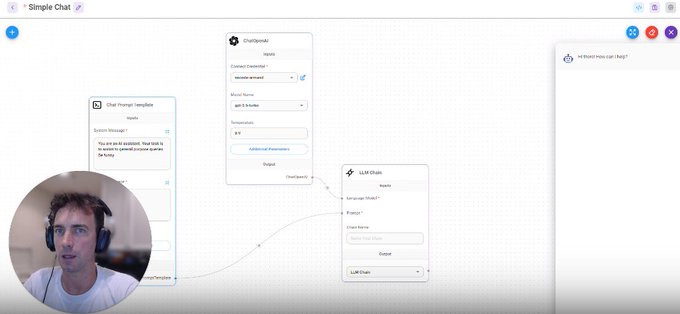

Flowise is trending on GitHub. It's an open-source drag & drop UI tool that lets you build custom LLM apps in just minutes. Powered by LangChain, it features:

- Ready-to-use app templates

- Conversational agents that remember

- Seamless deployment on cloud platforms

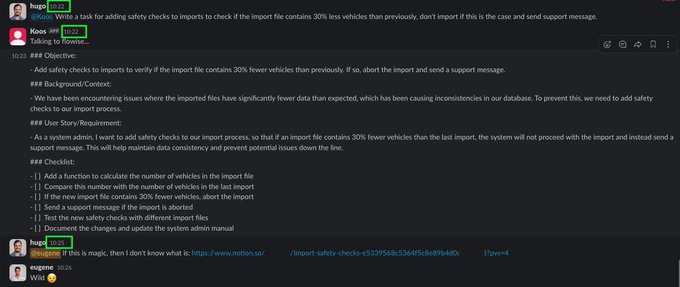

Using our new AI bot called Koos with @FlowiseAI to create project management tasks in Notion, right from Slack 🤯 Let me know who would like to see a 5min explainer on how we did this 🎉

Flowise just reached 12,000 stars on GitHub. It allows you to build customized LLM apps using a simple drag & drop UI. You can even use built-in templates with logic and conditions connected to LangChain and GPT:

- Conversational agent with memory

- Chat with PDF and Excel

A multi-modal chatbot that effortlessly merges text and image generation into seamless conversations. 🚀 📢 Watch the demo with conversation starting with asking for advice on building strong financial habits (sped up slightly for demo) 🪄Chatbot magically generates a visual

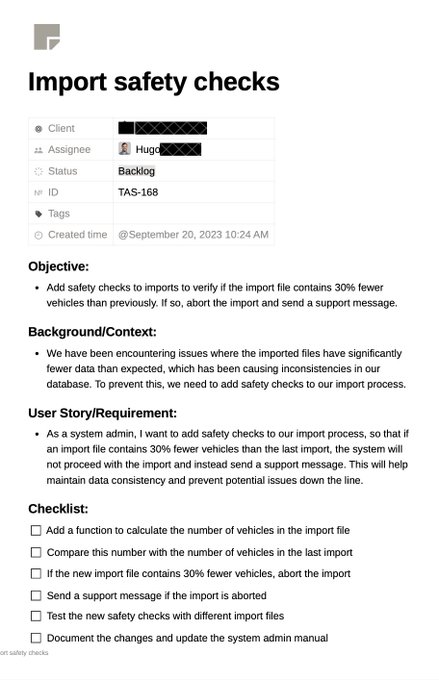

Create your first custom chatbot using LLMs in 5 min! No coding required. We will use @FlowiseAI. Join here to get the step-by-step tutorial and video tomorrow at 7 a.m. ET: nocode.ai

Flowise is seriously impressive. Not only for quickly building and deploying LLM apps, but for visualizing chains

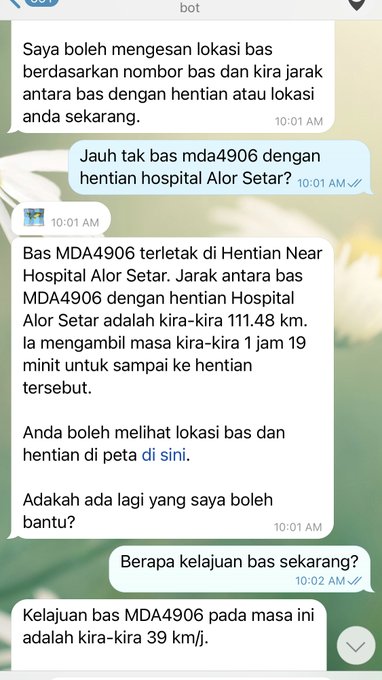

Thanks to @FlowiseAI & @LangChainAI, it's so easy to create a Telegram bot that is capable of getting real-time bus info (location & speed) from a GPS device. Plus more functionality to calculate distance and duration and present a map to the user. @OpenAI Function Calling is awesome too 👍

If you are building in AI and not using @FlowiseAI - you are seriously wasting your time. It's like Figma but for backend AI applications. Design & test your entire stack in less than an hour. Even test MVP. Build to industrial scale with the exported output.

Chat with your PDFs for free, no code!!! In this thread I show you how to create a custom ChatGPT, without a single line of code, that you can embed on your website. We use @FlowiseAI, an incredible tool for building LLM applications with #nocode. Let's get started 🧵

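AutoGen = LLM multi-agent framework 👨👩👧👦 Flowise = no-code LLM workflow builder platform 0️⃣ @FlowiseAI builds the functional units; AutoGen handles scheduling, collaboration, and task execution. Hmm! Kind of beautiful! 🎏 🎀 🪄 🪅 🎊 🎉 AutoGen + Flowise = a super AI assistant on a zero-code platform. Video 👇 youtu.be/gKNR1ghU1Ao?si… #AutoGen #Flowise #OpenAI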

Webinars

Learn how to use Flowise through webinar series with our partners.

Enterprise

Looking for specific use cases and support?